September 22, 2021

Turn DRYiCE Lucy into a Voice Agent by Combining Lucy’s API with Twilio Programmable Voice

DRYiCE Lucy’s conversational AI provides an excellent platform for automating a wide variety of use cases where users want an immediate resolution. However, many more scenarios are ripe for automation if we extend virtual agents to voice, behind a toll-free number.

There are two immediate advantages for our customers and end users:

- Automate existing conversations (from channels such as web and Teams) over the phone

- Users can simply pick up the phone and get the same experience, without navigating a jungle of menu options

The Bot Connect API of DRYiCE Lucy (https://www.dryice.ai/products-and-platforms/lucy) fulfils exactly this requirement and lets us build integrated solutions such as the one we discuss in this post. We’ll use Twilio’s Programmable Voice APIs (https://www.twilio.com/voice) for speech-to-text and text-to-speech.

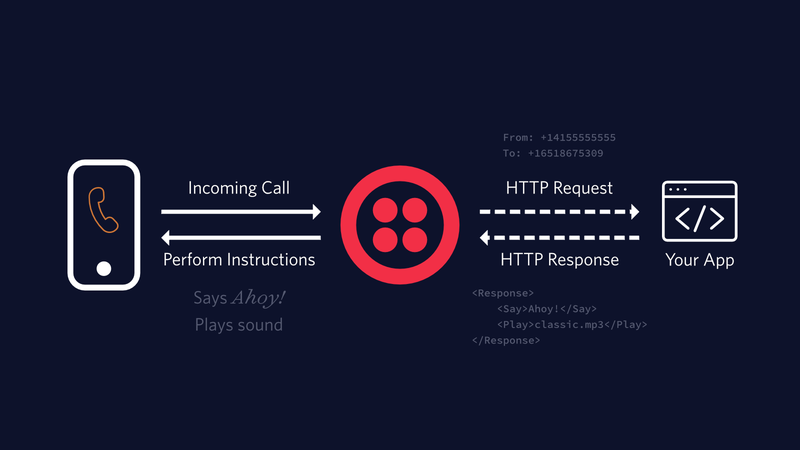

The high-level flow resembles the following illustration where ‘Your App’ would be a relay server talking to Lucy:

Source: https://www.twilio.com/docs/voice/tutorials/how-to-respond-to-incoming-phone-calls-node-js

In a simplified scenario:

- The user calls a number (provided by Twilio)

- Twilio makes a request to your application

- Your application sends back a custom TwiML (https://www.twilio.com/docs/voice/twiml) document to Twilio

- Twilio reads the response aloud to the user

Understanding Redirection with DRYiCE Lucy

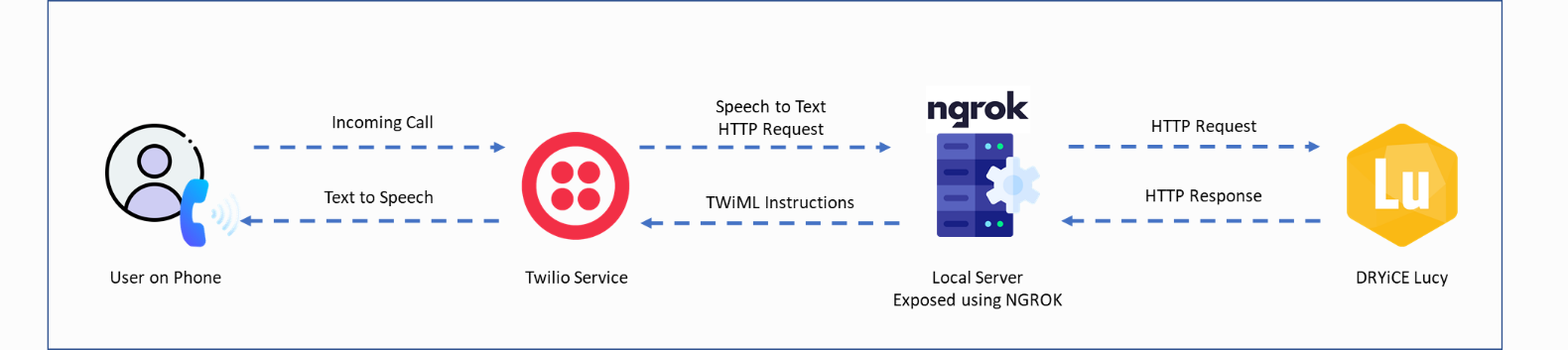

Let’s jump into re-creating the same setup for Lucy and it would look like the following flow:

Understanding the call flow above:

- User calls a number (provided by Twilio)

- Twilio makes a request to our local relay server, which we have exposed to the internet using ngrok (https://ngrok.com)

- Our relay server calls Lucy’s Bot Connect API to fetch a response

- Lucy returns its response, the initial greeting, to our relay server

- Once the response is received, we save the conversation context, which we use in subsequent requests to Lucy

- Our relay server sends back a custom TwiML (https://www.twilio.com/docs/voice/twiml) document to Twilio with two components:

  - Lucy’s response to the initial request (the greeting, in this case)

  - Redirection instructions specifying where to go next

- Twilio reads the response aloud to the user

- Twilio waits for the user’s speech input

Prerequisites

- Lucy Conversational AI instance

  - Existing OTB (out-of-the-box) or custom use cases configured

  - Bot Connect API enabled

  - Lucy instance authentication

- Twilio account

  - Twilio number with voice enabled and sufficient balance

  - Account SID & Auth Token

  - ngrok tunnel URL configured in the Twilio Console

Implementing a Voice Interpreter in Express

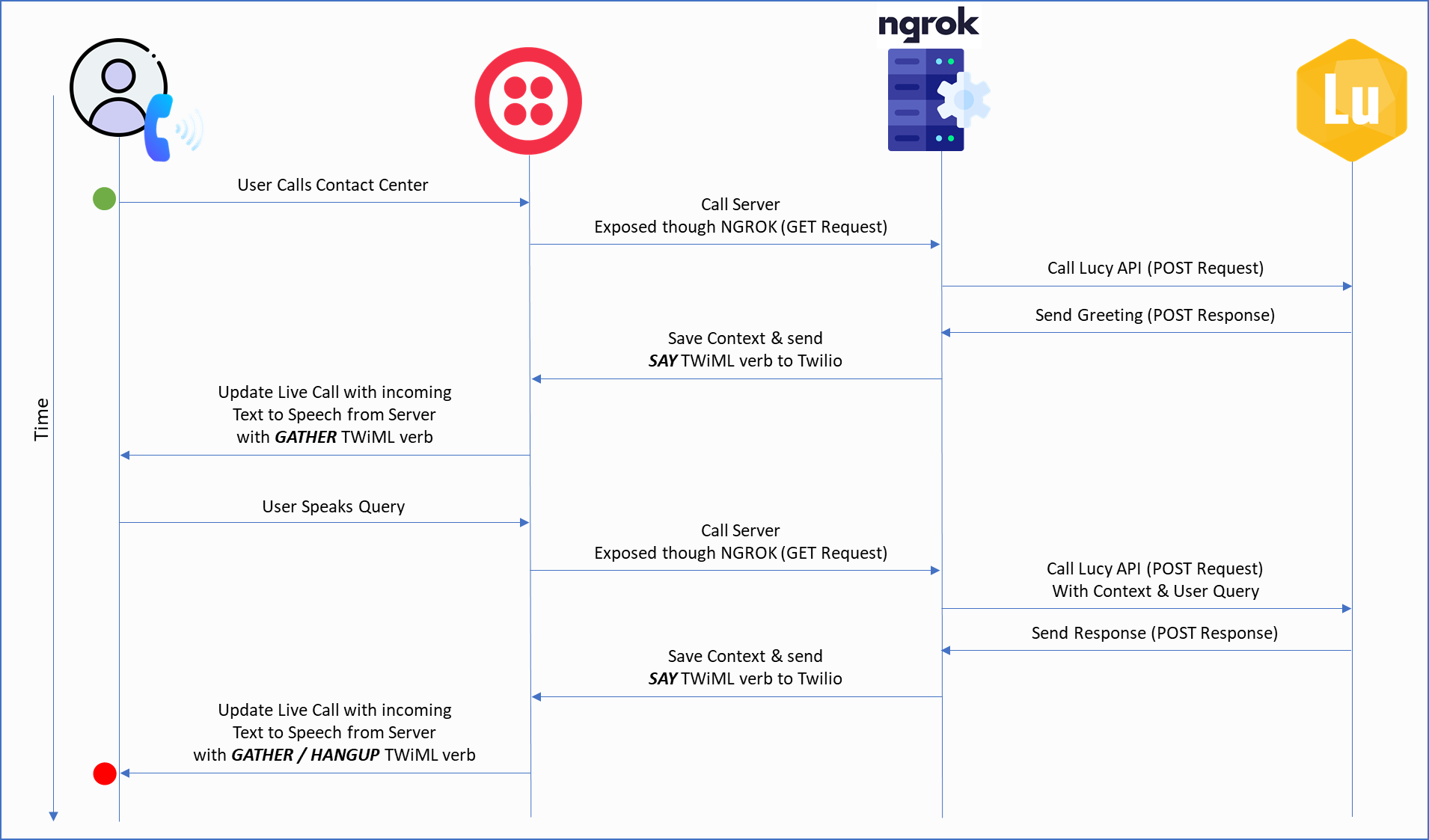

This exercise essentially extends Lucy’s text chat to voice, so that users can call Lucy or a central support number. We’ll create an Express (https://expressjs.com) server that provides all of the relay functionality between Lucy and Twilio. The following state diagram outlines our code.

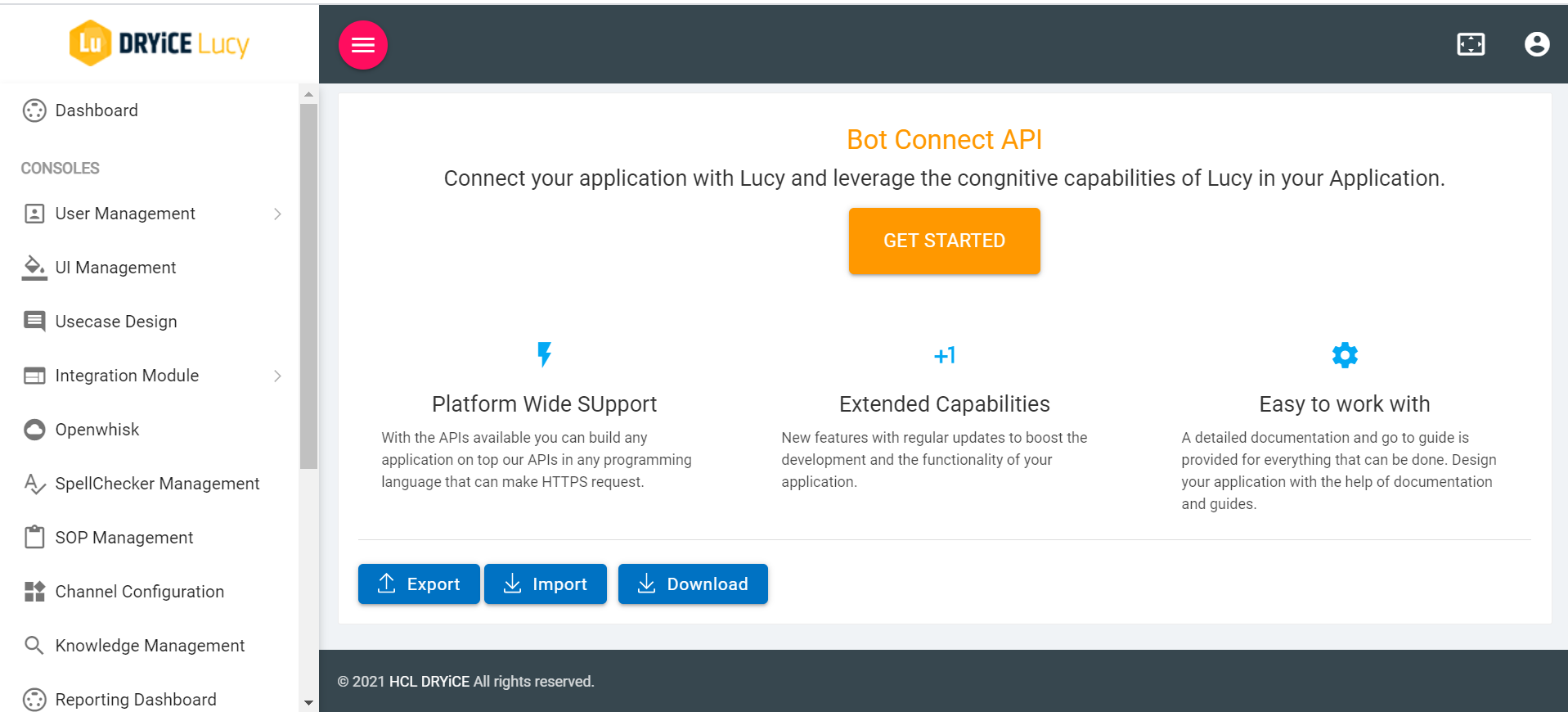
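The loop in the state diagram can also be expressed as a small lookup table. The sketch below is a minimal, hypothetical model built from the three routes used in the handlers of this post (`'/'`, `'/getUserResponse'` and `'/transcribed'`). It is not code from the linked repository, and it only traces which route Twilio visits next.

```javascript
// Hypothetical model of the relay loop: each route lists what it returns
// to Twilio and which route the call visits next.
const CALL_FLOW = {
  '/': { action: 'Say Lucy reply + Redirect', next: '/getUserResponse' },
  '/getUserResponse': { action: 'Gather speech', next: '/transcribed' },
  '/transcribed': { action: 'REST update of the live call', next: '/' },
};

// Walk the loop for a number of steps, starting from the incoming call on '/'.
function traceCall(steps) {
  const visited = [];
  let route = '/';
  for (let i = 0; i < steps; i++) {
    visited.push(route);
    route = CALL_FLOW[route].next;
  }
  return visited;
}

console.log(traceCall(4).join(' -> '));
```

Each full turn of the conversation is one pass through the three routes. The call only leaves the loop when someone hangs up.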

Enabling Lucy’s API

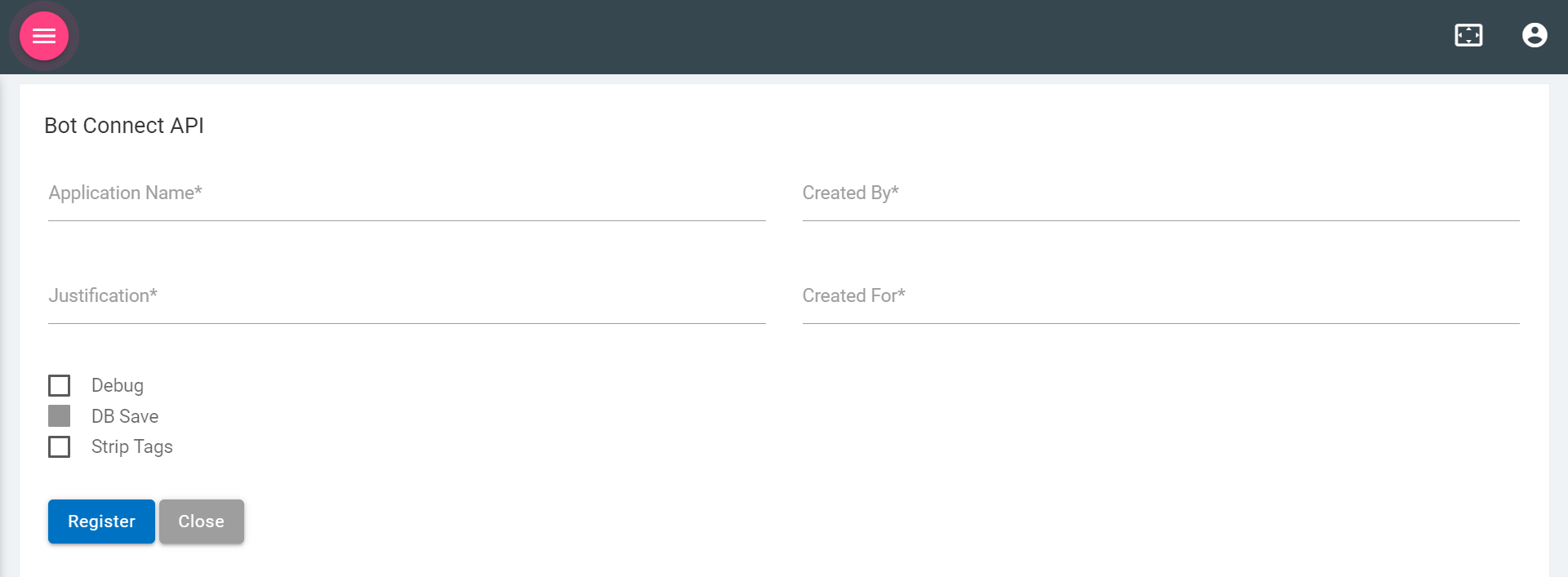

You can enable it on your Lucy instance under Manage Console → BOT Connect API → Registering Your Application.

Once your application is registered, Lucy generates a Key ID & Secret that your application uses to authenticate.

Creating an Express Server

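```javascript
const express = require('express')
const twilio = require('twilio')
const axios = require('axios')
// Local module exporting accountSid, authToken, lucyEndpoint and callUpdateURL
const env = require('./environment')

const VoiceResponse = twilio.twiml.VoiceResponse

// Twilio REST client, used later to update live calls
const client = twilio(env.accountSid, env.authToken)

// Conversation state shared by the route handlers below
let callCount = 0
let context = null
let transcribedText = ''

const app = express()

// Twilio sends webhook parameters as form-encoded request bodies
app.use(express.urlencoded({ extended: false }))
app.use(express.json())

// ngrok should forward to this port (3000 is an assumed default)
const PORT = process.env.PORT || 3000
app.listen(PORT, () => console.log(`Relay server listening on port ${PORT}`))
```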

The server relies on a few common modules and tools:

- Axios for making HTTP requests (https://www.npmjs.com/package/axios)

- Twilio (https://www.npmjs.com/package/twilio)

- Nodemon for restarting the server automatically when files change (https://www.npmjs.com/package/nodemon). This is a development tool and is not loaded in code.

Twilio sends webhook parameters as form-encoded request bodies, so our server uses express.urlencoded to parse them.
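The server code requires a local `./environment` module that is not shown in this post. Below is a minimal, hypothetical sketch of what it might contain. The property names match how the code uses `env`, but the environment-variable names (`TWILIO_ACCOUNT_SID` and so on) are our own assumptions. Reading credentials such as the Auth Token from environment variables keeps them out of source control.

```javascript
// environment.js (hypothetical sketch): maps config keys used as env.<key>
// in the server to assumed environment-variable names.
const REQUIRED = {
  accountSid: 'TWILIO_ACCOUNT_SID',
  authToken: 'TWILIO_AUTH_TOKEN',
  lucyEndpoint: 'LUCY_ENDPOINT',
  callUpdateURL: 'CALL_UPDATE_URL', // absolute ngrok URL of the '/' route
};

// Build the config from a source such as process.env, failing fast when a
// value is missing so the server never starts half-configured.
function loadEnvironment(source) {
  const config = {};
  const missing = [];
  for (const [key, variable] of Object.entries(REQUIRED)) {
    const value = source[variable];
    if (value === undefined || value === '') {
      missing.push(variable);
    } else {
      config[key] = value;
    }
  }
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return config;
}

// In the real module you would export the result, for example:
// module.exports = loadEnvironment(process.env);
```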

Handling Incoming Requests to Twilio

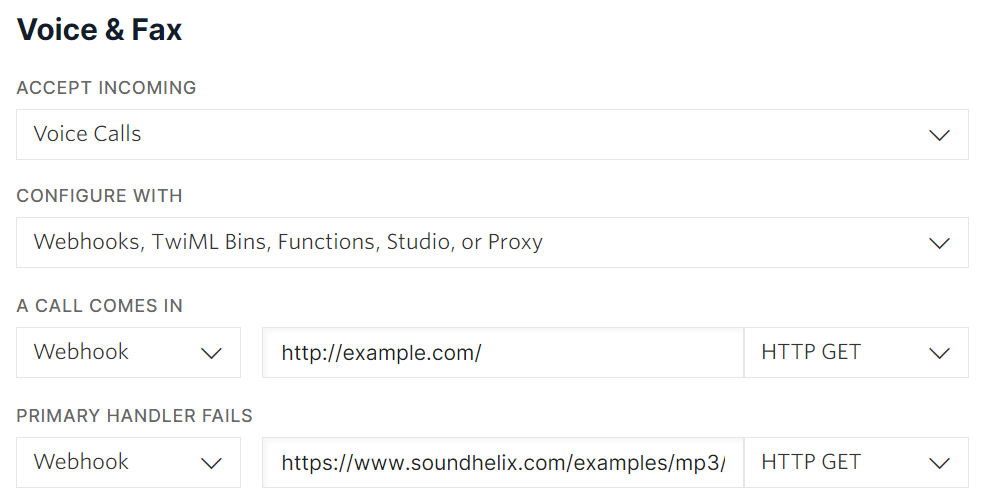

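For calls to be answered correctly, we need to set the voice webhook of our Twilio number in the Twilio Console to the ngrok forwarding address. Our relay server answers incoming calls with a GET route, so set the webhook’s HTTP method to GET (Twilio defaults to POST). You can also set up a failure handler URL in case our relay server does not respond at the time of the request.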

Fetching Response from Lucy and Reading Back to User

This GET handler runs when Twilio forwards the caller’s request to our server. It calls Lucy, fetches its response, and returns a TwiML document containing the Say verb, which Twilio reads aloud. This is where text-to-speech happens.

The document also includes a Redirect instruction that sends Twilio to /getUserResponse once it finishes speaking. Remember, Twilio expects a valid TwiML document, so we send the entire document through res.send.

The most important component of this handler and the others is the VoiceResponse object, which provides the framework for building TwiML for Twilio. You can also write TwiML directly into the response instead of using the library.

The same GET handler serves all subsequent calls to Lucy, using the context that we save on every request. You can see the helper code here (https://github.com/hydroweaver/LucyandTwilio).
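To illustrate writing TwiML directly, here is a minimal sketch that builds by hand the document that `say(text)` followed by `redirect('/getUserResponse')` produces. The helper names `escapeXml` and `sayThenRedirect` are hypothetical. A Redirect without a `method` attribute is requested with POST, which matches the `app.post('/getUserResponse', ...)` route.

```javascript
// Escape XML special characters so bot text such as "Q&A" stays valid TwiML.
// '&' must be replaced first so later entities are not double-escaped.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Speak the text, then send Twilio to the given URL (POST by default).
function sayThenRedirect(text, redirectUrl) {
  return '<?xml version="1.0" encoding="UTF-8"?>' +
    '<Response>' +
    `<Say>${escapeXml(text)}</Say>` +
    `<Redirect>${escapeXml(redirectUrl)}</Redirect>` +
    '</Response>';
}

console.log(sayThenRedirect('Hi, I am Lucy. How can I help?', '/getUserResponse'));
```

The library is usually the safer choice because it handles escaping and verb nesting for you. Hand-written TwiML mainly helps when you want to see or test exactly what is sent to Twilio.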

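```javascript
app.get('/', (req, res) => {
  // raw and requestOptions come from the helper code in the linked repository:
  // the request body carries the saved context and the latest user query
  axios.post(env.lucyEndpoint, raw, requestOptions)
    .then(response => {
      callCount += 1;
      // Save Lucy's context for the next request
      context = response.data;
      console.log(response.data.output);

      // Speak Lucy's reply, then send Twilio to /getUserResponse
      const apiToVoiceResponse = new VoiceResponse();
      apiToVoiceResponse.say(response.data.output.text[0]);
      apiToVoiceResponse.redirect('/getUserResponse');

      res.type('text/xml');
      res.send(apiToVoiceResponse.toString());
    })
    .catch(err => {
      // Always answer Twilio with valid TwiML, even if Lucy is unreachable
      console.error(err);
      const errorResponse = new VoiceResponse();
      errorResponse.say('Sorry, something went wrong. Please try again later.');
      errorResponse.hangup();
      res.type('text/xml');
      res.send(errorResponse.toString());
    });
})
```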
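The handler reads only `response.data.output.text[0]`. Below is a more defensive sketch that assumes the same response shape, with `output.text` as an array of strings. It speaks every non-empty entry and falls back to a fixed prompt when Lucy returns nothing usable. Joining all entries is our assumption about how multi-message replies should sound. The helper name `speakableReply` and the fallback text are hypothetical.

```javascript
// Turn Lucy's response data into one string for the Say verb, assuming
// data.output.text is an array of message strings (as in the handler above).
function speakableReply(data, fallback) {
  const texts = data && data.output && Array.isArray(data.output.text)
    ? data.output.text
    : [];
  const spoken = texts
    .filter(t => typeof t === 'string' && t.trim() !== '') // skip empty entries
    .map(t => t.trim())
    .join(' ');
  return spoken || fallback;
}

console.log(speakableReply(
  { output: { text: ['Hello!', 'How can I help?'] } },
  "Sorry, I didn't get that."
));
```

Inside the handler, you would pass the result to `apiToVoiceResponse.say(...)` in place of `response.data.output.text[0]`.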

Collecting User Voice for Bi-Directional Communication

Once the caller is in contact with Lucy, it asks for the user’s query, which the user speaks into the call. We collect it with the Gather verb, which uses speech-to-text to convert the caller’s utterances into text. A key parameter to tune is speechTimeout: the number of seconds of silence Twilio waits after the caller stops speaking before it ends recognition. A value that is too short can cut the caller off mid-sentence, while a value that is too long adds waiting time on every turn. Tune it with the request-response latency in mind.

Once the transcription (speech-to-text conversion) is complete, Twilio posts the result to the /transcribed endpoint so that we can take further action.

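```javascript
app.post('/getUserResponse', (req, res) => {
  const response = new VoiceResponse();

  // Collect the caller's speech; Twilio posts the transcription to /transcribed
  response.gather({
    input: 'speech',
    speechTimeout: 3,          // seconds of silence that end the utterance
    // finishOnKey: '#',
    speechModel: 'phone_call',
    enhanced: true,            // premium model; applies only with 'phone_call'
    action: '/transcribed'
  });

  res.type('text/xml');
  res.send(response.toString());
})
```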
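Twilio’s callback to /transcribed arrives as a form-encoded body, which `express.urlencoded()` turns into `req.body`. The sketch below shows the same parsing with Node’s built-in `URLSearchParams` and pulls out the fields this post relies on (`CallSid`, `SpeechResult`), plus the `Confidence` value Twilio also sends. The helper name `parseGatherCallback` and the sample body are hypothetical.

```javascript
// Parse a form-encoded Gather callback body into the fields we use.
function parseGatherCallback(rawBody) {
  const params = new URLSearchParams(rawBody); // decodes '+' as a space
  const confidence = params.get('Confidence');
  return {
    callSid: params.get('CallSid'),
    // Default to an empty string in case SpeechResult is missing
    speechResult: params.get('SpeechResult') || '',
    confidence: confidence === null ? null : Number(confidence),
  };
}

console.log(parseGatherCallback(
  'CallSid=CA123&SpeechResult=reset+my+password&Confidence=0.92'
));
```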

Updating Live Call to Get Lucy’s Response to Transcribed Query

Once we collect and transcribe the user’s speech, we need to send it to Lucy again so that its natural language processing can understand the input, extract the correct intent, and respond accordingly. To do this, once the call reaches the /transcribed endpoint, we update the live call with client.calls(callSid).update() and redirect it to our initial GET handler, which sends the latest context and user query to Lucy.

The callSid is extracted from Twilio’s request to the transcription endpoint, which also carries the transcribed user query in SpeechResult.

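```javascript
app.post('/transcribed', (req, res) => {
  // Twilio posts the transcription and the call's SID as form fields
  transcribedText = req.body.SpeechResult;
  const callSid = req.body.CallSid;

  // Redirect the live call to env.callUpdateURL (the absolute ngrok URL of
  // the initial GET handler), which sends the new query to Lucy
  client.calls(callSid)
    .update({ method: 'GET', url: env.callUpdateURL })
    .then(call => {
      console.log(call.to);
      // The call has been redirected; this reply only closes Twilio's request
      res.type('text/xml');
      res.send(new VoiceResponse().toString());
    })
    .catch(err => {
      console.error(err);
      res.sendStatus(500);
    });
})
```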
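The handlers in this post keep `context` and `transcribedText` in module-level variables, so they can only serve one caller at a time. One possible alternative is to key the state by CallSid, which Twilio includes in every voice webhook request. The class below is a hypothetical in-memory sketch, not part of the linked repository. A production setup would likely use a shared store instead.

```javascript
// Hypothetical in-memory store that keeps each call's Lucy context and
// latest transcription separate, keyed by Twilio's CallSid.
class CallStore {
  constructor() {
    this.calls = new Map();
  }

  // Return the state for a call, creating an empty entry on first use.
  get(callSid) {
    if (!this.calls.has(callSid)) {
      this.calls.set(callSid, { context: null, lastQuery: '', turns: 0 });
    }
    return this.calls.get(callSid);
  }

  // Save Lucy's latest context after each response and count the turn.
  saveContext(callSid, context) {
    const state = this.get(callSid);
    state.context = context;
    state.turns += 1;
  }

  // Save the caller's latest transcribed query.
  saveQuery(callSid, query) {
    this.get(callSid).lastQuery = query;
  }

  // Drop the state when the call ends; returns true if an entry existed.
  end(callSid) {
    return this.calls.delete(callSid);
  }
}

const store = new CallStore();
store.saveQuery('CA1', 'reset my password');
store.saveContext('CA1', { id: 42 });
console.log(store.get('CA1'));
```

In the GET handler, the SID would come from `req.query.CallSid`. In /transcribed, it would come from `req.body.CallSid`.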

And that’s it! This call will sustain itself back and forth until the end of the conversation.

The call ends when the user hangs up. To have Lucy end the call when the conversation is over, update the initial GET handler to detect the end of the conversation and include the Hangup verb in its TwiML response to Twilio. We have not added a hang-up condition here, to keep the example simple.
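Below is a minimal sketch of the TwiML the GET handler could return for each turn. It always speaks Lucy’s reply, then either hangs up or redirects back to /getUserResponse. How you detect the end of a Lucy conversation depends on your use case flow, so the `conversationOver` flag is an assumption passed in by the caller. The helper names are hypothetical. With the Twilio library, the equivalent is calling `hangup()` on the VoiceResponse instead of `redirect(...)`.

```javascript
// Escape XML special characters ('&' first to avoid double-escaping).
function escapeXml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Speak Lucy's reply, then end the call or continue to the next turn.
// conversationOver is a hypothetical flag derived from your use case flow.
function buildTurnTwiml(text, conversationOver) {
  const next = conversationOver
    ? '<Hangup/>'
    : '<Redirect>/getUserResponse</Redirect>';
  return '<?xml version="1.0" encoding="UTF-8"?>' +
    `<Response><Say>${escapeXml(text)}</Say>${next}</Response>`;
}

console.log(buildTurnTwiml('Thanks for calling. Goodbye!', true));
```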

The end user’s experience depends on how the use case flow has been configured and how robust its fallbacks are. We hope you enjoyed reading this post, and we would be glad to hear about your experience and the solutions you envision with such integrations.

You can find more about our conversational AI platform – DRYiCE Lucy here: https://www.dryice.ai/products-and-platforms/lucy

Karan Verma

Karan Verma leads the product management for DRYiCE Lucy. He has over 10 years of experience in product management, solution engineering, and product evangelism.